Welcome to the resource topic for 2021/994

Title:

BKW Meets Fourier: New Algorithms for LPN with Sparse Parities

Authors: Dana Dachman-Soled, Huijing Gong, Hunter Kippen, Aria Shahverdi

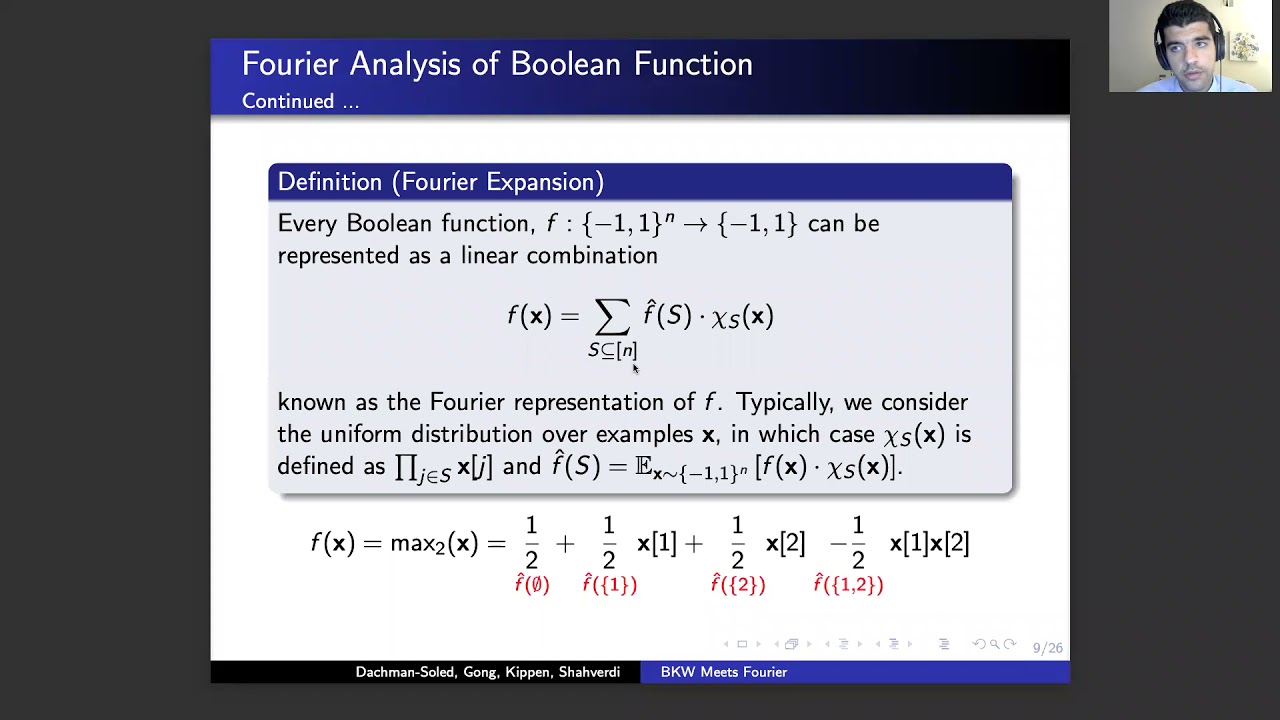

Abstract:We consider the Learning Parity with Noise (LPN) problem with sparse secret, where the secret vector \textbf{s} of dimension n has Hamming weight at most k. We are interested in algorithms with asymptotic improvement in the \textit{exponent} beyond the state of the art. Prior work in this setting presented algorithms with runtime n^{c \cdot k} for constant c < 1, obtaining a constant factor improvement over brute force search, which runs in time {n \choose k}. We obtain the following results: - We first consider the \textit{constant} error rate setting, and in this case present a new algorithm that leverages a subroutine from the acclaimed BKW algorithm [Blum, Kalai, Wasserman, J.~ACM '03] as well as techniques from Fourier analysis for p-biased distributions. Our algorithm achieves asymptotic improvement in the exponent compared to prior work, when the sparsity k = k(n) = \frac{n}{\log^{1+ 1/c}(n)}, where c \in o(\log \log(n)) and c \in \omega(1). The runtime and sample complexity of this algorithm are approximately the same. - We next consider the \textit{low noise} setting, where the error is subconstant. We present a new algorithm in this setting that requires only a \textit{polynomial} number of samples and achieves asymptotic improvement in the exponent compared to prior work, when the sparsity k = \frac{1}{\eta} \cdot \frac{\log(n)}{\log(f(n))} and noise rate of \eta \neq 1/2 and \eta^2 = \left(\frac{\log(n)}{n} \cdot f(n)\right), for f(n) \in \omega(1) \cap n^{o(1)}. To obtain the improvement in sample complexity, we create subsets of samples using the \textit{design} of Nisan and Wigderson [J.~Comput.~Syst.~Sci. '94], so that any two subsets have a small intersection, while the number of subsets is large. Each of these subsets is used to generate a single p-biased sample for the Fourier analysis step. We then show that this allows us to bound the covariance of pairs of samples, which is sufficient for the Fourier analysis. - Finally, we show that our first algorithm extends to the setting where the noise rate is very high 1/2 - o(1), and in this case can be used as a subroutine to obtain new algorithms for learning DNFs and Juntas. Our algorithms achieve asymptotic improvement in the exponent for certain regimes. For DNFs of size s with approximation factor \epsilon this regime is when \log \frac{s}{\epsilon} \in \omega \left( \frac{c}{\log n \log \log c}\right), and \log \frac{s}{\epsilon} \in n^{1 - o(1)}, for c \in n^{1 - o(1)}. For Juntas of k the regime is when k \in \omega \left( \frac{c}{\log n \log \log c}\right), and k \in n^{1 - o(1)}, for c \in n^{1 - o(1)}.

ePrint: https://eprint.iacr.org/2021/994

Talk: https://www.youtube.com/watch?v=qEOeJdH06f8

See all topics related to this paper.

Feel free to post resources that are related to this paper below.

Example resources include: implementations, explanation materials, talks, slides, links to previous discussions on other websites.

For more information, see the rules for Resource Topics .