Welcome to the resource topic for 2019/264

Title:

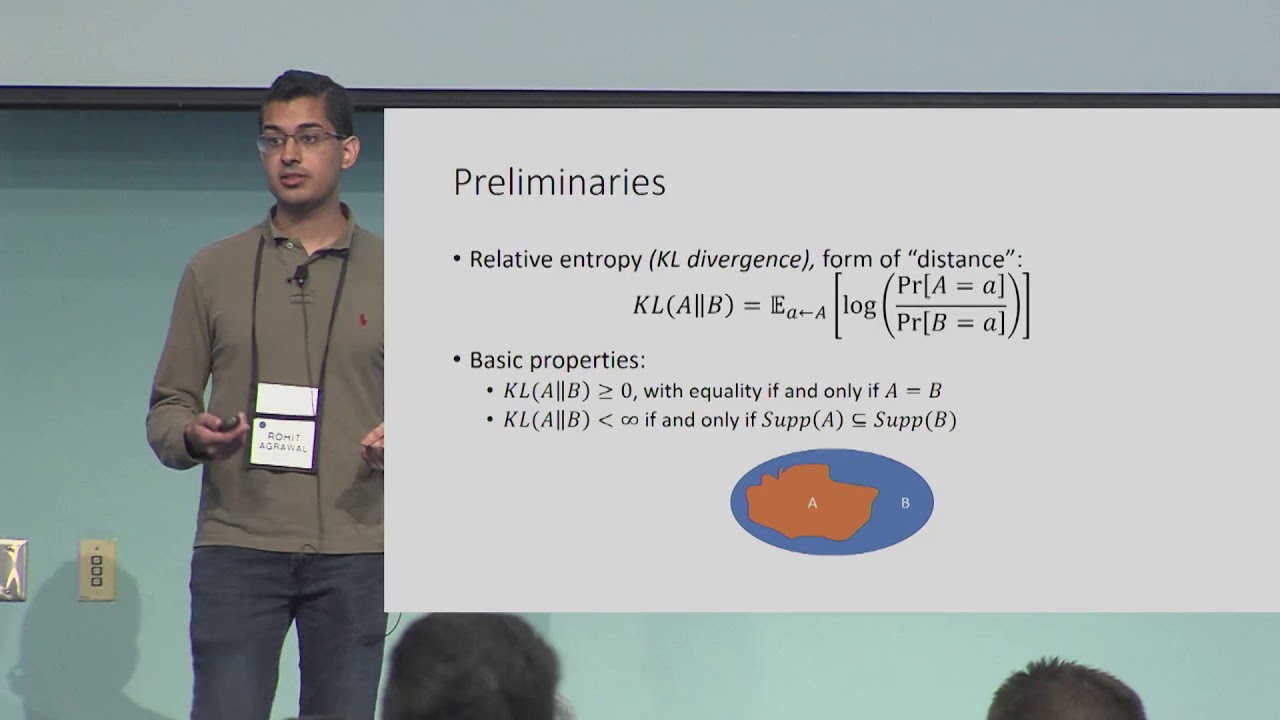

Unifying computational entropies via Kullback-Leibler divergence

Authors: Rohit Agrawal, Yi-Hsiu Chen, Thibaut Horel, Salil Vadhan

Abstract:We introduce hardness in relative entropy, a new notion of hardness for search problems which on the one hand is satisfied by all one-way functions and on the other hand implies both next-block pseudoentropy and inaccessible entropy, two forms of computational entropy used in recent constructions of pseudorandom generators and statistically hiding commitment schemes, respectively. Thus, hardness in relative entropy unifies the latter two notions of computational entropy and sheds light on the apparent “duality” between them. Additionally, it yields a more modular and illuminating proof that one-way functions imply next-block inaccessible entropy, similar in structure to the proof that one-way functions imply next-block pseudoentropy (Vadhan and Zheng, STOC '12).

ePrint: https://eprint.iacr.org/2019/264

Talk: https://www.youtube.com/watch?v=J_e2hysOPEI

See all topics related to this paper.

Feel free to post resources that are related to this paper below.

Example resources include: implementations, explanation materials, talks, slides, links to previous discussions on other websites.

For more information, see the rules for Resource Topics .